Introduction

In quantitative finance, speed and structure matter. As demand for real-time insight grows, the ability to automate report generation without compromising mathematical integrity becomes a differentiator. Most attention goes to alpha generation, but there is also considerable value in infrastructure that automates data processing, analysis, and structured insight generation.

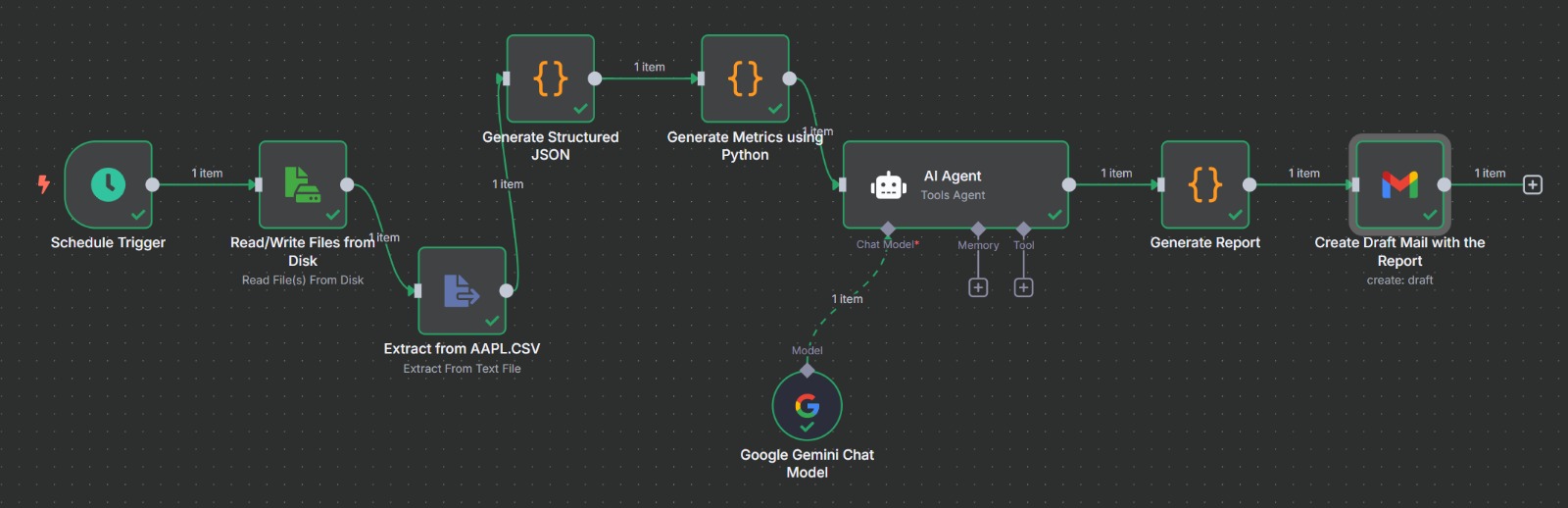

This article outlines a streamlined approach to creating a Market Monitor Agent—a lightweight automation pipeline that integrates AI (in our case, Google Gemini), Python-based analytics, and a workflow orchestration platform like n8n. The goal is to transform raw market data into concise, structured, and timely reports that remain faithful to the quantitative logic defined by an underlying algorithm.

Architecture Overview

At its core, the system ingests equity time series data (e.g., daily OHLCV bars for AAPL), calculates the relevant technical metrics, prompts Gemini to describe them, and produces a structured market report delivered as a Gmail draft or through a Slack or HTTP endpoint.

Core Components

The architecture combines four components. n8n, a source-available (fair-code) workflow automation platform, orchestrates the data pipeline. Python handles all deterministic computation of technical metrics, including moving averages and volatility calculations. Google Gemini is used strictly to structure natural-language summaries and never for quantitative computation, which preserves the integrity of the numeric analysis. Finally, the Gmail integration drafts a structured report for review and delivery.
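The division of labor described above can be sketched as a single function that wires the stages together. This is a minimal illustration, not the workflow's actual code: `run_pipeline` and the three stand-in stages are hypothetical names, and in practice n8n nodes would play the role of the callables.

```python
from typing import Callable, Dict, List


def run_pipeline(
    closes: List[float],
    compute_metrics: Callable[[List[float]], Dict[str, float]],
    summarize: Callable[[Dict[str, float]], str],
    deliver: Callable[[str], None],
) -> str:
    """Run one report cycle: numbers first, narrative second, delivery last."""
    metrics = compute_metrics(closes)  # deterministic Python step
    report = summarize(metrics)        # LLM step sees only precomputed values
    deliver(report)                    # e.g. Gmail draft, Slack, or HTTP
    return report


# Hypothetical stand-ins for the real stages.
def demo_metrics(closes: List[float]) -> Dict[str, float]:
    return {"last_close": closes[-1], "mean_close": sum(closes) / len(closes)}


def demo_summarize(metrics: Dict[str, float]) -> str:
    return (
        f"Last close {metrics['last_close']:.2f}, "
        f"mean {metrics['mean_close']:.2f}."
    )


outbox: List[str] = []  # stands in for a delivery channel
report = run_pipeline([10.0, 11.0, 12.0], demo_metrics, demo_summarize, outbox.append)
print(report)
```

Passing the stages in as callables keeps the numeric step testable on its own, without a model or a mail account in the loop.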

Metrics Captured

The current implementation supports a range of technical indicators, all derived from clean historical price data.

The system tracks both Simple and Exponential Moving Averages (SMA/EMA) over custom lookback windows, providing trend visibility across different time horizons. The Relative Strength Index (RSI) offers insights into momentum and potential reversal points. Bollinger Bands help identify volatility expansions and contractions that often precede significant price movements. Average True Range (ATR) measurements quantify market volatility in absolute terms, while crossover signals (such as 20-day vs. 50-day moving averages) highlight potential trend changes. Additional metrics include daily returns, drawdowns, and volatility buckets for risk assessment, along with breakout and support/resistance analysis based on historical price action.

These metrics form the foundation of the quantitative logic and are computed prior to any interaction with the LLM, ensuring fidelity to the defined trading algorithm.
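A few of these indicators can be sketched in plain Python. The function names, default windows, and conventions below are assumptions for illustration: the EMA is seeded with the SMA of the first window, the RSI uses simple averages of gains and losses rather than Wilder's smoothing, and the Bollinger Bands use the population standard deviation. ATR is omitted because it needs high and low prices as well as closes.

```python
from math import sqrt
from typing import List, Optional, Tuple


def sma(values: List[float], window: int) -> Optional[float]:
    """Simple moving average of the last `window` values, or None if too short."""
    if window <= 0 or len(values) < window:
        return None
    return sum(values[-window:]) / window


def ema(values: List[float], window: int) -> Optional[float]:
    """Exponential moving average, seeded with the SMA of the first `window` values."""
    if window <= 0 or len(values) < window:
        return None
    alpha = 2.0 / (window + 1)
    avg = sum(values[:window]) / window
    for v in values[window:]:
        avg = alpha * v + (1 - alpha) * avg
    return avg


def rsi(closes: List[float], window: int = 14) -> Optional[float]:
    """RSI from simple averages of gains and losses over the last `window` changes."""
    if window <= 0 or len(closes) < window + 1:
        return None
    tail = closes[-(window + 1):]
    changes = [b - a for a, b in zip(tail, tail[1:])]
    avg_gain = sum(c for c in changes if c > 0) / window
    avg_loss = sum(-c for c in changes if c < 0) / window
    if avg_loss == 0:
        return 100.0  # no losses in the window
    return 100.0 - 100.0 / (1.0 + avg_gain / avg_loss)


def bollinger(
    closes: List[float], window: int = 20, k: float = 2.0
) -> Optional[Tuple[float, float, float]]:
    """Return (lower, middle, upper) bands using the population standard deviation."""
    if window <= 0 or len(closes) < window:
        return None
    recent = closes[-window:]
    mid = sum(recent) / window
    std = sqrt(sum((x - mid) ** 2 for x in recent) / window)
    return mid - k * std, mid, mid + k * std


def max_drawdown(closes: List[float]) -> float:
    """Largest peak-to-trough decline as a negative fraction (0.0 if none)."""
    peak, worst = closes[0], 0.0
    for c in closes:
        peak = max(peak, c)
        worst = min(worst, c / peak - 1.0)
    return worst


def crossover_state(closes: List[float], fast: int = 20, slow: int = 50) -> Optional[str]:
    """Label the fast SMA as above ('bullish') or below ('bearish') the slow SMA."""
    fast_avg, slow_avg = sma(closes, fast), sma(closes, slow)
    if fast_avg is None or slow_avg is None:
        return None
    return "bullish" if fast_avg > slow_avg else "bearish"
```

Each function returns `None` when the history is shorter than the window, so a missing metric is visible downstream instead of being silently replaced by a partial average.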

Guardrails Against Hallucination

A common concern when using large language models in financial workflows is hallucination: fabricated insights that the data does not support.

The system applies several safeguards against this. All numerical metrics are precomputed in Python, and no calculation is delegated to Gemini. The prompt is deliberately constrained: it requests only a summary or contextual framing of the precomputed data, not novel analysis. Inputs reach Gemini as JSON, so the model receives a fixed set of named fields and values rather than free-form text. Finally, all output is parsed and validated against the known metrics before it is finalized.

This ensures the model operates strictly within a descriptive boundary, functioning more like a summarization layer than an analytical agent.
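The final validation step could look something like the sketch below, which flags any number in the generated text that does not match a precomputed value. The function name, the tolerance, and the `allowed` list of window lengths are assumptions; the article does not specify how the check is implemented.

```python
import re
from typing import Dict, List, Sequence

# Matches unsigned integers and decimals such as 20, 62.5, or 185.13.
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(
    text: str,
    metrics: Dict[str, float],
    allowed: Sequence[float] = (),
    tol: float = 0.005,
) -> List[float]:
    """Return numbers found in `text` that match no known value within `tol`."""
    known = list(metrics.values()) + list(allowed)
    found = [float(m) for m in NUMBER.findall(text)]
    return [x for x in found if not any(abs(x - k) <= tol for k in known)]


metrics = {"close": 185.13, "sma_20": 183.42, "sma_50": 179.75, "rsi": 62.5}
windows = [20, 50]  # lookback lengths the text may legitimately mention

good = "AAPL closed at $185.13, above its 20-day ($183.42) and 50-day ($179.75) averages."
bad = "AAPL closed at $185.13 with the RSI at 71.2."

print(unsupported_numbers(good, metrics, windows))  # empty list: every figure is backed
print(unsupported_numbers(bad, metrics, windows))   # contains 71.2
```

A non-empty result would be grounds to reject the draft or regenerate it, since it means the text contains a figure the Python layer never produced.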

Sample JSON Prompt to Gemini

Gemini is invoked with a structured JSON prompt that confines it to a descriptive, fact-based narrative. The prompt is generated programmatically by the Python layer.
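A prompt of this kind might be assembled as in the sketch below. The field names, the instruction wording, and `build_prompt` itself are assumptions for illustration; the metric values are the ones quoted in the sample output that follows.

```python
import json
from typing import Any, Dict


def build_prompt(ticker: str, metrics: Dict[str, Any]) -> str:
    """Serialize precomputed metrics and fixed instructions into a JSON prompt."""
    payload = {
        "task": "Summarize the metrics below in plain English.",
        "rules": [
            "Use only the values provided.",
            "Do not compute, estimate, or forecast any number.",
        ],
        "ticker": ticker,
        "metrics": metrics,
    }
    # sort_keys keeps the prompt byte-for-byte stable across runs.
    return json.dumps(payload, indent=2, sort_keys=True)


prompt = build_prompt(
    "AAPL",
    {
        "close": 185.13,
        "sma_20": 183.42,
        "sma_50": 179.75,
        "rsi": 62.5,
        "volatility_pct": 1.74,
        "crossover": "bullish",
    },
)
print(prompt)
```

Because the prompt is plain JSON, it can be logged alongside the generated report, which makes it straightforward to check later which inputs produced which narrative.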

Example Output from Gemini:

AAPL closed at $185.13 today, continuing above both its 20-day ($183.42) and 50-day ($179.75) moving averages. The RSI remains elevated at 62.5, suggesting momentum remains strong but not yet overbought. A bullish crossover persists as the 20-day SMA continues to lead. Volatility is steady at 1.74%, and the recent drawdown is within normal bounds.

Integration with Proprietary Algos

For teams already deploying proprietary trading algorithms, this system can be tightly coupled with existing signal engines. The generated metrics—derived from the same feature engineering pipelines used in your alpha models—can be fed into this agent for reporting or monitoring purposes.

Examples include monitoring signal strength, execution slippage, or spread deterioration to identify potential issues with trading execution. The system can report on volatility regime shifts that trigger conditional model adjustments, ensuring strategies adapt to changing market conditions. It can also generate daily trade rationales from structured backtest metadata, providing a clear narrative for each trading decision.

This offers not just operational transparency, but a quantifiable audit trail of how signals are evolving in production.
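As one illustration of the monitoring use case, a volatility regime shift could be detected by bucketing realized volatility and comparing the bucket with the previous one. The thresholds and function names here are hypothetical; a production system would take them from the signal engine it reports on.

```python
from math import sqrt
from typing import Dict, List, Optional, Union


def realized_vol(closes: List[float], window: int) -> Optional[float]:
    """Sample standard deviation of the last `window` simple daily returns."""
    if window < 2 or len(closes) < window + 1:
        return None
    tail = closes[-(window + 1):]
    rets = [b / a - 1.0 for a, b in zip(tail, tail[1:])]
    mean = sum(rets) / window
    return sqrt(sum((r - mean) ** 2 for r in rets) / (window - 1))


def vol_bucket(vol: float, low: float = 0.01, high: float = 0.02) -> str:
    """Map a daily volatility figure to a coarse bucket (thresholds are assumed)."""
    if vol < low:
        return "low"
    if vol < high:
        return "normal"
    return "high"


def regime_shift(prev_bucket: str, vol: float) -> Dict[str, Union[str, bool]]:
    """Report the current bucket and whether it differs from the previous one."""
    bucket = vol_bucket(vol)
    return {"bucket": bucket, "shifted": bucket != prev_bucket}


print(regime_shift("normal", 0.03))
```

The resulting dictionary is the kind of structured fact that can be passed to the reporting layer, so the narrative states that a shift occurred without the model having to infer it.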

Applications and Future Vision

The modular design of the agent allows for several extensions. It can plug into Slack, Telegram, or internal dashboards for real-time desk-level alerts. It can integrate with broker APIs such as Interactive Brokers or Alpaca to produce trade-linked summaries that put context alongside execution data. For broader coverage, it can schedule intraday or end-of-day (EOD) reporting across hundreds of tickers. Future enhancements could add LSTM or reinforcement learning (RL) based predictive overlays that adapt report narratives to changing market conditions and user preferences.
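Reporting across many tickers could be fanned out with a thread pool, as in this sketch. `build_reports` and `demo_report` are hypothetical names, and the per-ticker function is a stand-in for the full metrics, prompt, and validation cycle.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple


def build_reports(
    tickers: List[str],
    make_report: Callable[[str], str],
    max_workers: int = 8,
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run `make_report` per ticker; collect results and errors separately."""
    reports: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {t: pool.submit(make_report, t) for t in tickers}
        for ticker, fut in futures.items():
            try:
                reports[ticker] = fut.result()
            except Exception as exc:  # one bad ticker should not stop the batch
                errors[ticker] = str(exc)
    return reports, errors


def demo_report(ticker: str) -> str:
    """Stand-in for the real per-ticker report cycle."""
    if ticker == "BAD":
        raise ValueError("no data")
    return f"{ticker}: report ready"


reports, errors = build_reports(["AAPL", "MSFT", "BAD"], demo_report)
print(reports)
print(errors)
```

Keeping failures in a separate dictionary means a missing data feed for one symbol shows up in the run summary without blocking the other reports.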

What began as a simple report automation concept can become a real-time market monitoring layer that complements both discretionary and systematic desks.

Final Thoughts

This architecture represents a practical application of LLMs in quant infrastructure—not as decision-makers, but as structured communicators. By separating the analytical layer from the generative layer, and building tight guardrails into the system, we can maintain full control over the math while benefiting from narrative synthesis. As quant teams continue scaling their infrastructure, such agents offer a compelling way to close the loop between data, decisions, and delivery—without compromising on accuracy.